What is a Teachable Machine?

A teachable machine is an AI that learns from video input, such as gestures made by a subject, and responds with the answer that the same user has programmed for each gesture.

The most famous example of a teachable machine is the one created by Google.

But how does it work, you might ask yourself. Well, I can try to give you a proper explanation.

First of all, all teachable machines have something in common: a Neural Network.

A Neural Network, or NNet for short, is considered the brain of the AI behind it.

The system is loosely modelled on the neurons of the human body.

The information enters through an input, and it is not sent to a random output but to the output with the most appropriate answer.

But there is always one thing to take into account: the weight of each connection.

Every connection between neurons has a weight, and the signal becomes stronger or weaker depending on the weight of the connections it crosses.

Before the information reaches the most adequate output, the signal travels through the layers of the network, and each layer transforms it a little more.
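To make the idea of inputs, weights and layers more concrete, here is a minimal sketch in plain Python (not TensorFlow). The weights, the two-layer layout and the names `layer_forward` and `predict` are my own assumptions for illustration; a real network learns its weights during training instead of having them written by hand.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """One layer: each neuron sums its weighted inputs, adds a bias, applies sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs

# Hypothetical hand-picked weights: 2 inputs -> 2 hidden neurons -> 2 outputs.
HIDDEN_WEIGHTS = [[2.0, -1.0], [-1.5, 3.0]]
HIDDEN_BIASES = [0.0, 0.0]
OUTPUT_WEIGHTS = [[4.0, -4.0], [-4.0, 4.0]]
OUTPUT_BIASES = [0.0, 0.0]

def predict(inputs):
    """Pass the signal through both layers and pick the strongest output."""
    hidden = layer_forward(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    scores = layer_forward(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best, scores

answer, scores = predict([1.0, 0.0])
print("chosen output:", answer, "scores:", scores)
```

A large positive weight lets a signal through strongly, a negative weight pushes against it, and the output with the highest score is taken as the answer.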

With this said, let's go to the composition of the Google Teachable Machine.

The Google Teachable Machine is built on a library known as TensorFlow.

By definition, this library performs high-performance numerical computation across a variety of platforms, from desktop computers to clusters of servers to mobile devices.

TensorFlow is mainly used for machine learning and deep learning, but also for numerical computation in other scientific domains.

The version used here is TensorFlow.js, a JavaScript library based on the original Google library (written in C++ with Python bindings).

What makes this library special is that it can learn in the browser from training sets.

Inside the library there are tensors, which are numerical inputs such as single numbers, vectors and matrices.
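As a rough illustration of what a tensor looks like, here is a small sketch that uses plain nested Python lists instead of the real TensorFlow types. The helper names `shape` and `rank` are my own, and the sketch assumes the lists are non-empty and regular (every row has the same length).

```python
def shape(tensor):
    """Return the size of each dimension of a nested-list tensor."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]  # assumes a non-empty, regular nested list
    return tuple(dims)

def rank(tensor):
    """Number of dimensions: 0 for a number, 1 for a vector, 2 for a matrix."""
    return len(shape(tensor))

scalar = 7                        # a single number
vector = [1, 2, 3]                # a list of numbers
matrix = [[1, 2, 3], [4, 5, 6]]   # a list of rows

for name, value in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, "rank", rank(value), "shape", shape(value))
```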

These tensors are processed by the GPU instead of the CPU.

The GPU can be used because the library relies on WebGL, a browser API based on OpenGL technology.

Another component of the library is the images, which are sets of numbers organized in matrices.

Every pixel of an image is stored as RGBA numbers (red, green, blue and alpha).
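Here is a small sketch of how an image turns into numbers. The 2x2 image and the function name `image_to_numbers` are made up for the example, and scaling the values to the range 0.0 to 1.0 is a common convention that I am assuming here, not something specific to Teachable Machine.

```python
# A hypothetical 2x2 image: each pixel is (red, green, blue, alpha), from 0 to 255.
IMAGE = [
    [(255, 0, 0, 255), (0, 255, 0, 255)],
    [(0, 0, 255, 255), (255, 255, 255, 128)],
]

def image_to_numbers(image):
    """Flatten the rows of RGBA pixels into one list of values scaled to 0.0-1.0."""
    return [channel / 255 for row in image for pixel in row for channel in pixel]

numbers = image_to_numbers(IMAGE)
print(len(numbers), "numbers for", len(IMAGE) * len(IMAGE[0]), "pixels")
```

Each pixel contributes four numbers, so even a small picture becomes a long list of values for the NNet to work with.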

With the training sets, which are composed of the images and tensors, the AI has thousands of examples to teach the NNet.
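To show what "teaching with examples" means, here is a very reduced sketch. Instead of a full NNet it uses a single artificial neuron trained with the classic perceptron rule, and instead of thousands of images it uses four made-up examples of two brightness values each. The data, the learning rate and the names `predict` and `train` are all assumptions of mine.

```python
# Hypothetical training set: (two pixel brightness values, label).
# Label 1 means "bright", label 0 means "dark".
EXAMPLES = [
    ([0.9, 0.8], 1),
    ([0.8, 0.95], 1),
    ([0.1, 0.2], 0),
    ([0.2, 0.05], 0),
]

weights = [0.0, 0.0]
bias = 0.0
RATE = 0.1  # learning rate (hypothetical value)

def predict(inputs):
    """Answer 1 if the weighted sum is positive, otherwise 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=20):
    """Perceptron rule: nudge the weights whenever an example is answered wrongly."""
    global bias
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(inputs)
            for i, x in enumerate(inputs):
                weights[i] += RATE * error * x
            bias += RATE * error

train(EXAMPLES)
print("bright example:", predict([0.85, 0.9]))
print("dark example:", predict([0.1, 0.1]))
```

The more examples the training set contains, the better the weights can settle on values that also work for inputs the network has never seen.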

Each layer of a NNet represents different features, which the information takes on as it passes through.

These layer features depend on the program written with TensorFlow.

One component of the NNet is the sensors, which have to be optimized with functions to avoid big gaps between image progressions.

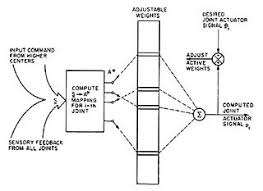

With this said, I will explain an example of a Neural Network: the CMAC network (Cerebellar Model Articulation Controller).

The CMAC network is based on a model of the mammalian cerebellum.

This type of NNet was first proposed for robotics, but it has since been used mostly in reinforcement learning and machine learning.

The NNet works by dividing the input space into hyper-rectangles, each of which is associated with a memory cell.

The contents of the memory cells are the weights, which are adjusted during training.

Usually, more than one quantisation of the input space is used (a quantisation being a division of the continuous input into a limited number of steps), so that any point in the input space is associated with a number of hyper-rectangles, and therefore with a number of memory cells.

The CMAC output is the algebraic sum of the weights of all the memory cells activated by the input.
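The description above can be sketched in a few lines of Python. This is a minimal one-dimensional version, so the hyper-rectangles become simple intervals. The class layout, the parameter names (`tiles`, `tilings`, `rate`) and the way the error is shared equally between the active cells are my own assumptions, not a reference implementation.

```python
class CMAC:
    """A minimal 1-D CMAC: several shifted quantisations of the same input range."""

    def __init__(self, low, high, tiles=8, tilings=4, rate=0.5):
        self.low = low
        self.width = (high - low) / tiles   # width of one interval
        self.tilings = tilings              # number of quantisations
        self.rate = rate                    # learning rate
        # One extra cell per quantisation because shifted grids overhang the range.
        self.weights = [[0.0] * (tiles + 1) for _ in range(tilings)]

    def active_cells(self, x):
        """Return one (quantisation, cell) pair per quantisation for the input x."""
        cells = []
        for t in range(self.tilings):
            offset = t * self.width / self.tilings   # shift each grid slightly
            index = int((x - self.low + offset) / self.width)
            index = max(0, min(index, len(self.weights[t]) - 1))
            cells.append((t, index))
        return cells

    def predict(self, x):
        """The output is the sum of the weights of the active cells."""
        return sum(self.weights[t][i] for t, i in self.active_cells(x))

    def train(self, x, target):
        """Share a fraction of the error equally between the active cells."""
        cells = self.active_cells(x)
        step = self.rate * (target - self.predict(x)) / len(cells)
        for t, i in cells:
            self.weights[t][i] += step

net = CMAC(0.0, 1.0)
for _ in range(20):
    net.train(0.5, 2.0)
print(net.predict(0.5), net.predict(0.55), net.predict(0.9))
```

After training on a single point, a nearby input shares some of the same cells and so receives part of the answer, while a distant input shares none of them. That overlap is what lets a CMAC generalise between neighbouring inputs.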

CMAC example

Because CMAC is rarely used in everyday computing and is found mostly in industrial environments, I will try to explain a possible example.

An idea would be to create a NNet that detects noise and its decibel level.

The idea would start by creating inputs from different sound examples, each with a different sound strength.

Once the weights are created, the idea would be to quantise the information obtained, store it via hashing, and create a graph with it.

Hashing consists of mapping the many digital (virtual) memory cells onto a smaller set of physical weights, so that cells sometimes overlap on the same weight, but not always.

The process of quantising the information is carried out with functions, which are mostly based on CMAC.

After quantising the information for every weight, the idea would be to get a new set of inputs with random sound values.

Once that information is obtained, quantise it and create a graph with it.

Depending on the number of noise examples obtained, the graph will represent the information more or less exactly.
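The steps of this idea could look roughly like the following sketch. Everything in it is an assumption of mine: the sound examples are plain amplitudes between 0.05 and 1.0, the "correct" answer comes from the usual formula 20 * log10(amplitude), the hashing is a simple modulo into a table of 128 weights, and the graph is drawn with text characters. With this table size no two cells share a weight; with a smaller `TABLE_SIZE` some of them would.

```python
import math
import random

TILINGS = 8        # overlapping quantisations of the amplitude axis
TILE_WIDTH = 0.1   # width of one interval, in amplitude units
TABLE_SIZE = 128   # number of physical weights (hypothetical size)
RATE = 0.3         # learning rate (hypothetical value)
weights = [0.0] * TABLE_SIZE

def decibels(amplitude):
    """Reference answer: level in dB relative to an amplitude of 1.0."""
    return 20 * math.log10(amplitude)

def slots(amplitude):
    """Quantise the amplitude once per tiling and hash each cell to a weight slot."""
    result = []
    for t in range(TILINGS):
        interval = int((amplitude + t * TILE_WIDTH / TILINGS) / TILE_WIDTH)
        result.append((interval * TILINGS + t) % TABLE_SIZE)  # simple modulo hash
    return result

def predict(amplitude):
    """CMAC output: the sum of the weights in the active slots."""
    return sum(weights[s] for s in slots(amplitude))

def train(amplitude, target):
    """Share a fraction of the error between the active slots."""
    step = RATE * (target - predict(amplitude)) / TILINGS
    for s in slots(amplitude):
        weights[s] += step

def mean_error(samples):
    return sum(abs(predict(a) - decibels(a)) for a in samples) / len(samples)

# Step 1: sound examples with different strengths.
examples = [i / 100 for i in range(5, 101)]
error_before = mean_error(examples)

# Step 2: adjust the weights over several passes through the examples.
for _ in range(50):
    for a in examples:
        train(a, decibels(a))
error_after = mean_error(examples)
print(f"mean error before: {error_before:.2f} dB, after: {error_after:.2f} dB")

# Step 3: new random sounds, quantised by the trained network and drawn as bars.
rng = random.Random(0)
for a in sorted(rng.uniform(0.05, 1.0) for _ in range(5)):
    level = predict(a)
    bar = "#" * max(0, round(30 + level))
    print(f"amplitude {a:.2f}  {level:6.1f} dB  {bar}")
```

Using more examples in step 1, or narrower intervals, should give bars that follow the real decibel curve more closely, at the cost of needing more weights.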

That is how to create a NNet with CMAC.

And this is all that I can explain about teachable machines, Neural Networks and CMAC as an example.